Interdisciplinary Scientist

Multisensory Tech

My research lies at the intersection of UX/UI, Engineering, and Psychology.

I am passionate about creating UX/UI that augments or transforms reality to improve people's lives.

Virtual reality experiences are becoming more elaborate, and researchers and major technology companies are already building the interactions that will shape the future of our communications and entertainment in the digital world. However, most of these applications are limited to on-screen/on-device time. I argue that the key to unleashing the potential of these technologies is to explore new applications and platforms designed for a long-lasting impact that empowers the user in their daily life.

My research aims to bridge the limited time one might spend in virtual or augmented reality and our conventional reality, extending the insights gained there into everyday life. This includes applications that aim to improve users' performance and well-being. The experiences I have developed range from augmenting our reality for communication, entertainment, and creativity to augmenting our cognition for memory, relaxation, and improved sleep quality. These experiences adapt the explicitness of their outputs (audio-visuals and scent) in real time, based on inputs ranging from motor responses in AR/VR to physiological signals from wearables.

About me

I'm a first-generation student from a small town in the Catalan Pyrenees, northern Spain. I love mentoring and supporting students and researchers, especially those who feel like misfits: minorities, people from non-traditional backgrounds, or those who have struggled in the past.

I hold a Ph.D. and a master's degree from the Massachusetts Institute of Technology and a multimedia engineering degree from LaSalle University, Barcelona, Spain, and I have a background in computer science and in design focused on UX, UI, and film. I'm currently a Senior Researcher at Microsoft Research. Previously, I was a Research Fellow (postdoc) at Massachusetts General Hospital and Harvard Medical School. I also worked at the Google Creative Lab as a creative technologist and did two internships at Microsoft Research, developing virtual and augmented reality applications. I am also a Research Affiliate at the MIT Media Lab and was co-president of VR/AR at MIT.

Recognitions

Best Paper and Demo Awards at premier venues in human-computer interaction and bioengineering, as well as the Scent Innovator Award from Cosmetic Executive Women (CEW) and IFF (International Flavors and Fragrances). Facebook Graduate Fellowship, INK Fellow, and LEGO Foundation-sponsored research. Finalist for Fast Company's Innovation by Design Awards and the 2021 Edison Awards for Essence, wearables that monitor physiology and release scent during the day and night to improve sleep, memory, and wellbeing.

Besides research, I used to compete in and instruct skiing. My hometown was boring as hell and skiing was free, so that was pretty much all I did as a kid. I won 3 gold medals and was a finalist in the national slalom competitions in Catalonia. I was also recognized by the Union of Sports Councils of Catalonia (UCEC). Growing up, I spent most of my time on my grandparents' farm, playing with animals and strolling through the mountains.

My awards, patents, and research publications would not have been possible without the support of all my collaborators. Over the years, I have had the chance to work with brilliant people like Jaron Lanier, with whom I had the pleasure of writing a patent and a publication while I was at MSR, as well as my colleagues, mentees, and advisor, Professor Pattie Maes, during my wonderful years at the MIT Media Lab.

Featured Work

AR & VR

Here is a selection of my work and talks on using technology to augment, extend, or create virtual realities for wellbeing, including mindfulness, creativity, entertainment, and learning. The projects range from augmenting reality to augmenting humans.

Augmenting physiological signals for mindfulness & relaxation

Biofeedback has been used for decades as a mind-body intervention, gaining popularity in the 1960s. This technique helps patients gain voluntary control over involuntary, often unconscious physiological processes. It has proven effective in treating conditions such as chronic pain and anxiety, among other psychological conditions. In this project, I was interested in investigating the effects of unconscious biofeedback. Growing evidence suggests that non-conscious cues can significantly modulate cognitive processes. I hypothesize that unconscious body processes can be regulated by unconscious cues, using subliminal biofeedback to increase relaxation. I am currently working on this hypothesis at MGH/HMS. More details here.

From Virtual Reality to Expanded Reality: a special program for ArtFutura 2016 on Virtual Reality and Augmented Reality in film, video games, art, music, animation, education, and storytelling. It included segments on my work in collaboration with Xavier Benavides and Pattie Maes at the MIT Media Lab, along with recent work by Mediamonks, Tippett Studio, Arnold Abadie, John Carmack, Sentient Flux, Keiichi Matsuda, “Uncanny Valley”, Clyde DeSouza, and David Attenborough.

Before I started graduate school, I had already been working at the intersection of Design, Engineering, and Psychology. As an undergraduate, I collaborated with psychologists to create an Augmented Reality (AR) application designed to complement an arachnophobia treatment. The system monitored the patient's heart rate in real time and let the therapist adjust how intimidating the spider was. During my Master's and Ph.D., I continued bringing insights from neuroscience and psychology into the design of the UIs I developed, and I started experimenting with Brain-Computer Interfaces (BCI). The first BCI project that my colleague Xavi and I developed was PsychicVR, a Virtual Reality (VR) experience coupled with an EEG headband that provided real-time biofeedback of the user's mental state and changed the 3D content accordingly. My goal was to gamify meditation practice to help users who find meditating difficult and to spark interest among novices and children.
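The biofeedback loop behind PsychicVR can be illustrated as a smoothing-and-mapping step. This is a hypothetical sketch, not PsychicVR's code: it assumes the headband already yields a normalized "calm" score in [0, 1] for each reading, smooths it with an exponential moving average so the scene does not jitter, and maps the result to an illustrative scene parameter. The names `smooth_scores` and `scene_intensity`, the smoothing factor, and the output range are all assumptions.

```python
from typing import Iterable, List


def smooth_scores(scores: Iterable[float], alpha: float = 0.2) -> List[float]:
    """Exponentially smooth a stream of normalized mental-state scores.

    Hypothetical: assumes each score is meant to lie in [0, 1]; alpha
    controls how quickly the output follows new readings.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed: List[float] = []
    current = None
    for score in scores:
        score = min(max(score, 0.0), 1.0)  # clamp noisy readings into [0, 1]
        if current is None:
            current = score  # first reading initializes the average
        else:
            current = alpha * score + (1 - alpha) * current
        smoothed.append(current)
    return smoothed


def scene_intensity(calm: float, low: float = 0.0, high: float = 10.0) -> float:
    """Linearly map a smoothed calm score to a hypothetical scene parameter
    (for example, how high a virtual object floats)."""
    return low + calm * (high - low)


if __name__ == "__main__":
    readings = [0.1, 0.4, 0.8, 0.9, 0.3]
    for calm in smooth_scores(readings):
        print(f"calm={calm:.2f} -> intensity={scene_intensity(calm):.2f}")
```

A smaller `alpha` makes the visuals calmer and slower to react, which is one plausible trade-off for meditation, where abrupt changes could themselves break concentration.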

AutoEmotive started at a 24-hour IDEO Data-Driven Hackathon organized at MIT by the Volkswagen Group. Our team had two researchers from the Affective Computing group and two from the Fluid Interfaces group. Their interest in physiological sensing and our focus on interactive UX/UI at the Fluid Interfaces group merged into the concept of bringing empathy to the driving experience. During the 24 hours, I worked on the idea and the interaction and created the video showcasing our vision, which included some of the pre-existing emotion-sensing technologies used by Affective Computing.

A panel of judges from IDEO, MIT, and the Volkswagen Group selected us as the winning team of the hackathon, and we earned $5,000. We later published a paper at DIS. This work resulted in collaborations with Bentley and sparked interest from other car companies and a special interest group at the MIT Media Lab.

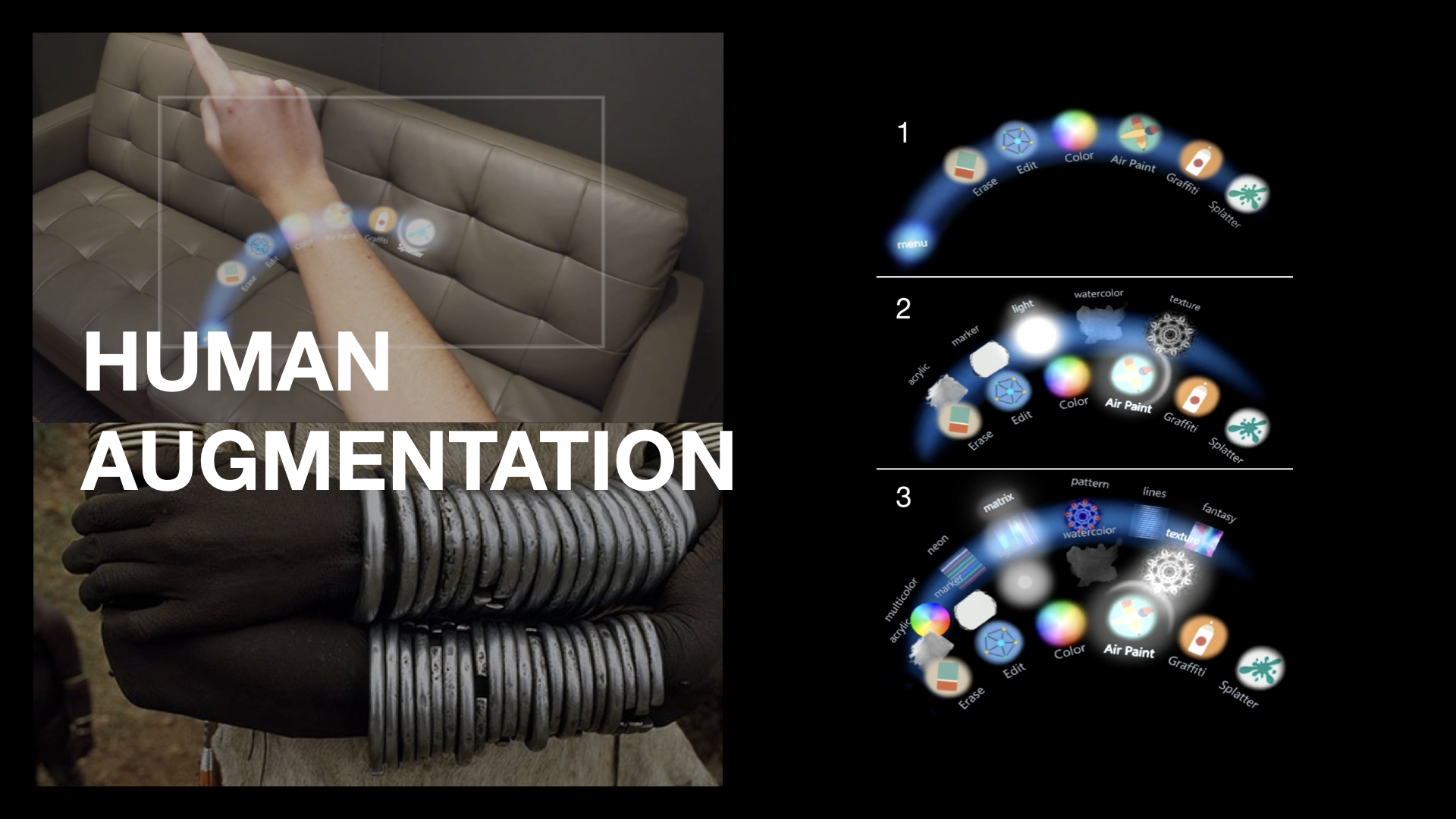

Audio-visual Augmentation for Creativity

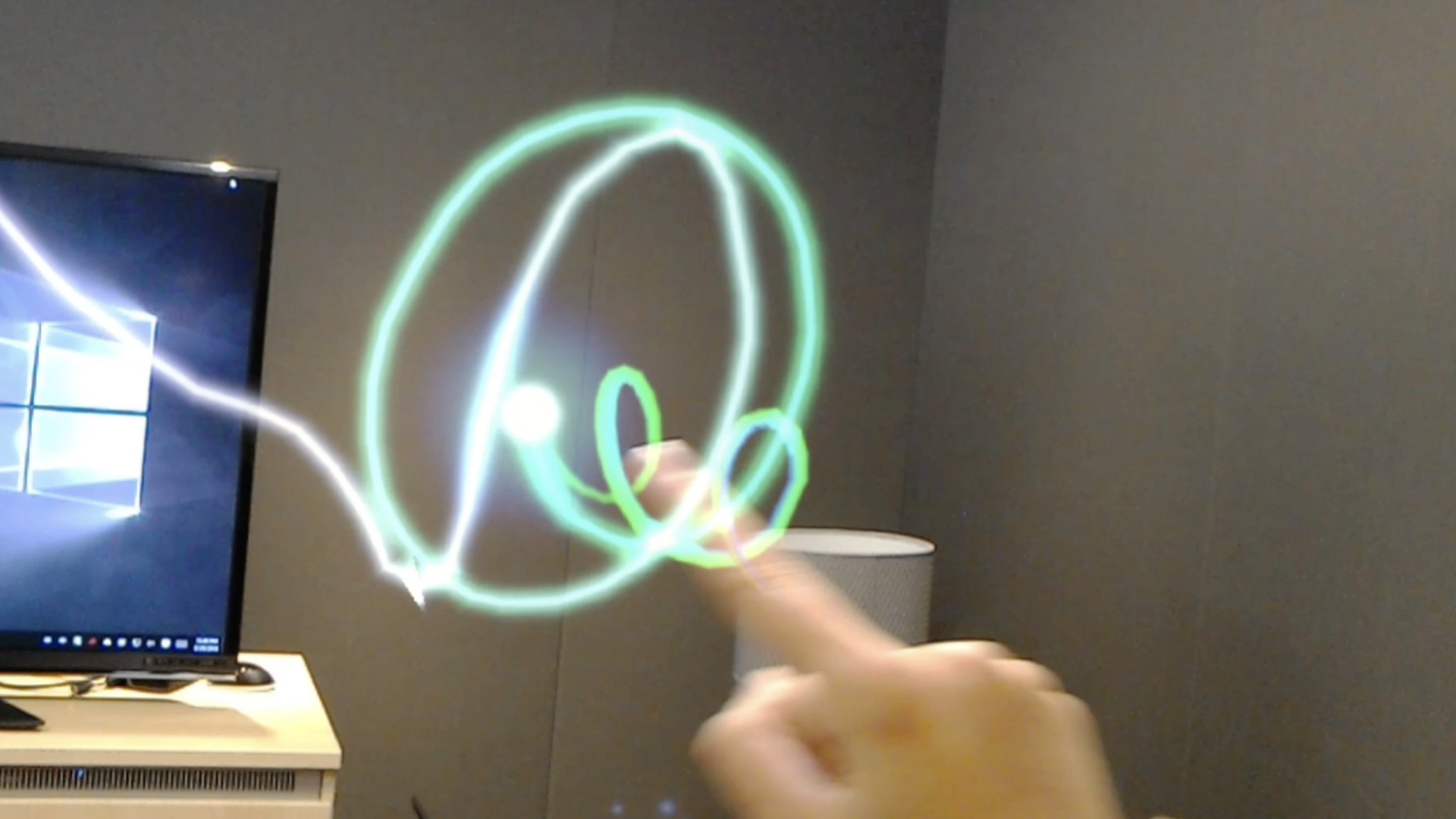

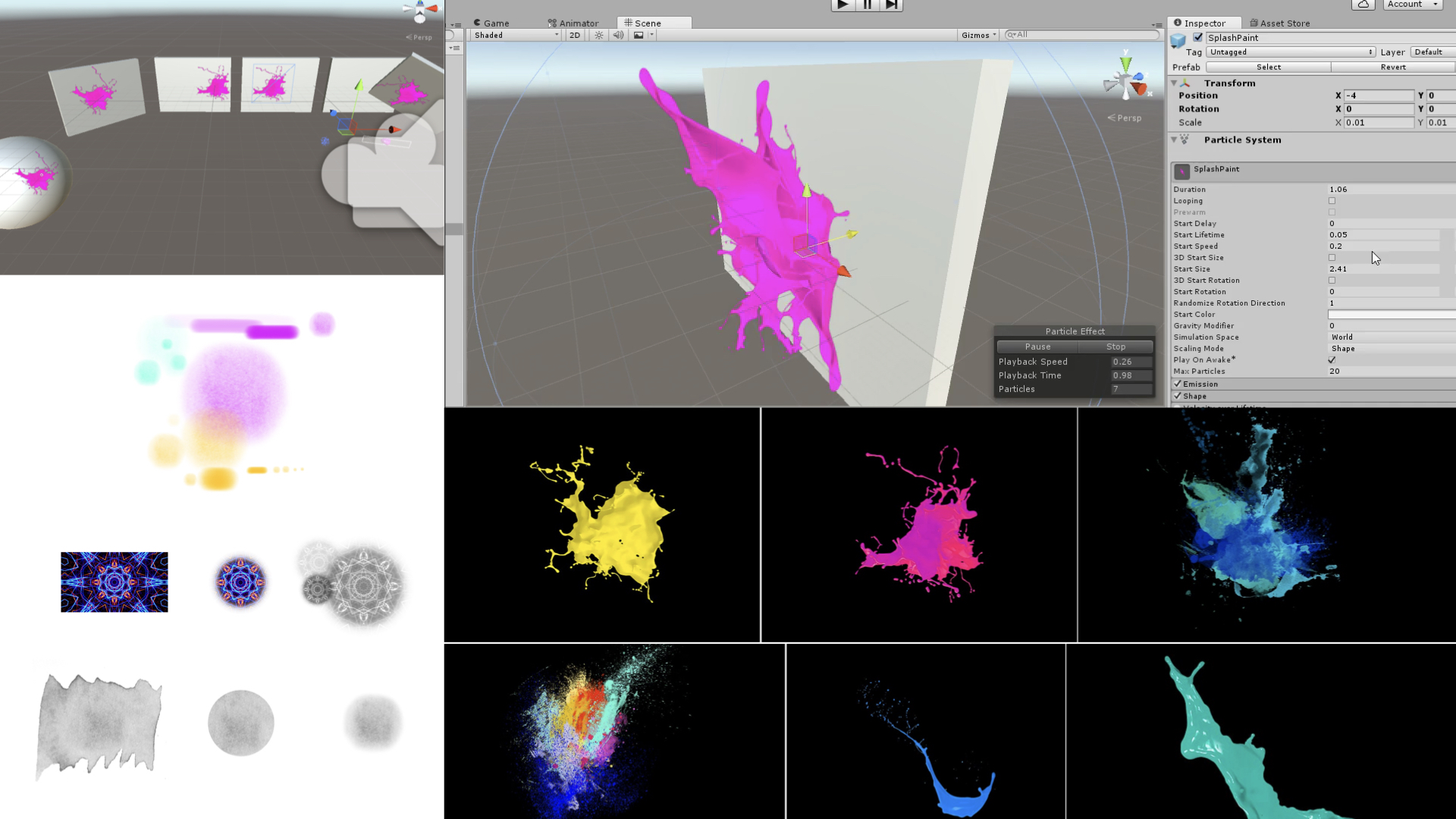

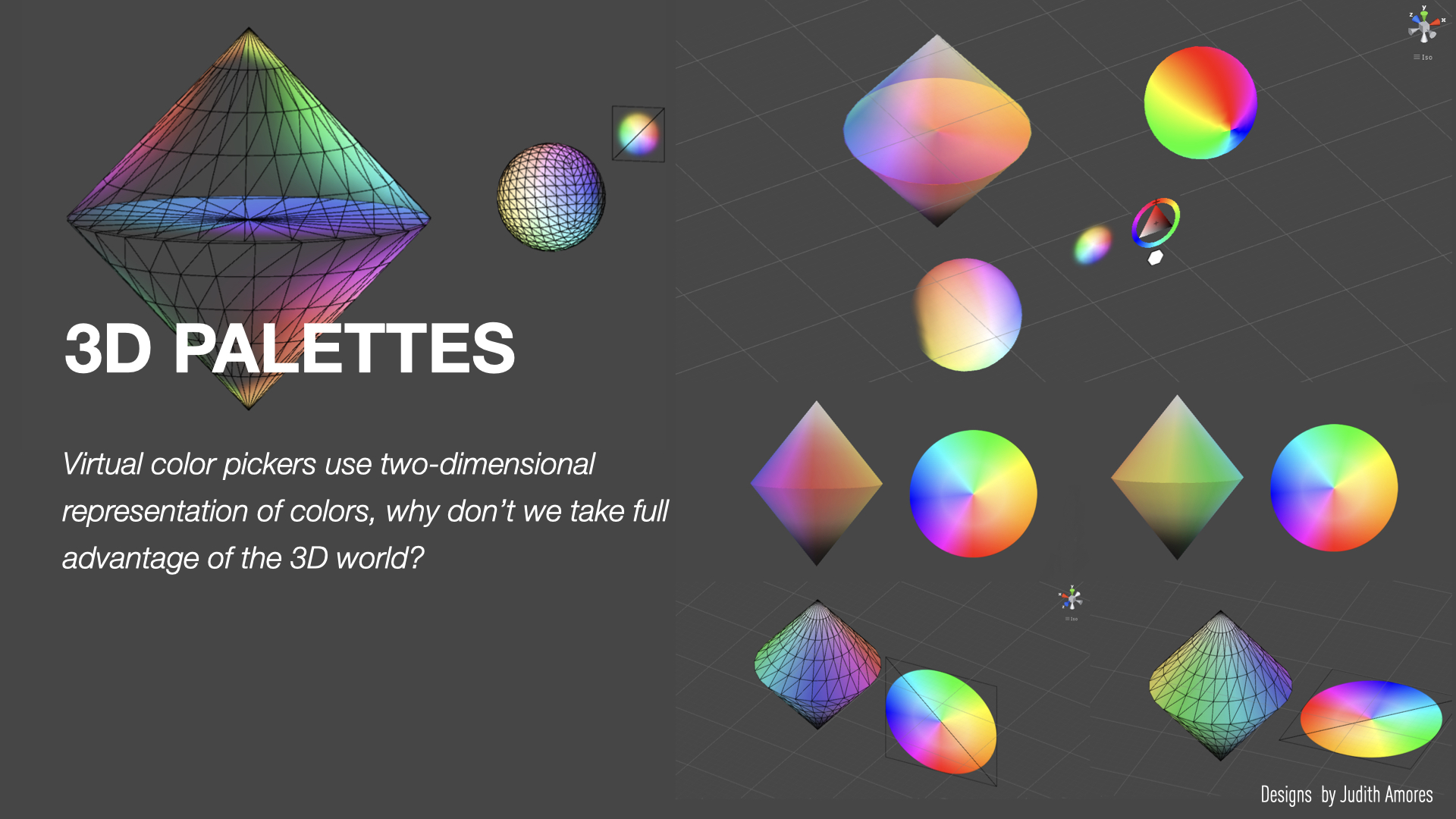

I built HoloArt from idea to execution during a summer internship at Microsoft Research, both coding and designing the app and its user interactions. I used C#, Unity, Adobe Photoshop, and Illustrator, and trained the system to recognize voice commands and track the user's arms. I brainstormed and ideated HoloArt with my mentor Jaron Lanier, a well-known pioneer widely considered a founder of the field of virtual reality. We filed two provisional patents, and one patent was granted. I also presented a video showcase and a short paper (Extended Abstracts) at ACM CHI. You can check out his awesome book, where he mentions the work we did together.

AR as a way to control and change physical objects for learning, entertainment & play

My colleague Anna Fuste and I built the first ARCore apps for the AR Experiments website while we were at the Google Creative Lab with Amit Pitaru's group. We built three projects from idea to execution: Draw & Dance, Paper Cubes, and Invisible Highway.

We built Draw & Dance entirely ourselves using Unity, Vuforia, and ARCore, with ARCore handling motion tracking, environmental understanding, and light estimation. To make the stick figure come to life and react to your voice, we used API.AI, programming a conversational app around the voice inputs a user might plausibly say. To detect which music is playing and change the dancing style, accessories, and clothing accordingly, we used the Spotify API and a tree of possibilities in the Unity Animator, with tons of prefabs for every music style and several iconic bands. For Invisible Highway, we worked on the idea, the development of the robot, and its connection with Unity and ARCore. We then collaborated with the global design studio Jam3, which designed the 3D models and further developed the experience for its public release.

Tactile AR/VR

TactileVR was a project I worked on with Xavier Benavides and Lior Shapira while at Microsoft Research. The project took three months from idea to execution. I worked on the vision of the project, the user interactions, the 3D environment, the user study, and the coding. We published a paper at IEEE ISMAR and filed a patent that was granted. We later adapted the project into "TactileAR" for a DIY optical see-through augmented reality HMD in collaboration with Jaron Lanier. A second IEEE ISMAR paper, with everyone involved in developing the HMD, was also published (this HMD was developed before the first HoloLens shipped).

TactileVR user study with children 5-11 years old.

Video of TactileVR experience, showcasing the different user interactions.

At minute 3:15, we describe TactileAR.

Augmenting AI to visualize neural networks and make the invisible visible

Paper Cubes is a DIY Augmented Reality platform that uses paper cube patterns and an AR app to teach computational concepts. In the last sprint of our project, we collaborated with David Ha and Jonas Jongejan during a hackathon between the Creative Lab and the Google Magenta team to add an "AI cube" that represented evolving 3D characters using a Recurrent Neural Network. Unlike typical ML approaches that pre-train a network's weights before deployment, we train the network in real time while the user is playing with the cubes. We use an evolution strategy and a large population of stick figures to evolve a set of weights that guides the agents to survival. New stick figures inherit from their assigned parents, and over time these inherited traits increase their chance of survival until, eventually, the stick figures learn to avoid the virtual and physical objects. It's very funny to watch them over time: they end up learning that to survive they need to stay still in the same spot, and some of them start rotating around the same axis and go a bit mad :)
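The evolution strategy described above can be sketched in a few lines. This is a minimal (μ + λ)-style sketch, not the code behind Paper Cubes: each individual is a flat list of weights standing in for one stick figure's network (the recurrent network itself is omitted), the fittest individuals become parents, and each child copies a randomly assigned parent plus Gaussian noise. The `fitness` function, which in the real experience would reflect how well a figure survives among the virtual and physical objects, is an assumption here, as are the function name, parameter names, and defaults.

```python
import random
from typing import Callable, List, Tuple

Weights = List[float]


def evolve(
    fitness: Callable[[Weights], float],
    n_weights: int,
    population_size: int = 50,
    n_parents: int = 10,
    sigma: float = 0.1,
    generations: int = 100,
    seed: int = 0,
) -> Tuple[Weights, float]:
    """Minimal (mu + lambda) evolution strategy over flat weight vectors.

    Parents are the fittest individuals; each child copies a randomly
    assigned parent and adds Gaussian noise (mutation). Parents survive
    into the next generation, so the best fitness never decreases.
    """
    rng = random.Random(seed)
    population = [[rng.gauss(0.0, 1.0) for _ in range(n_weights)]
                  for _ in range(population_size)]
    for _ in range(generations):
        ranked = sorted(population, key=fitness, reverse=True)
        parents = ranked[:n_parents]
        children = []
        for _ in range(population_size - n_parents):
            parent = rng.choice(parents)  # each child gets an assigned parent
            children.append([w + rng.gauss(0.0, sigma) for w in parent])
        population = parents + children
    best = max(population, key=fitness)
    return best, fitness(best)


if __name__ == "__main__":
    # Toy stand-in for "survival": closeness to an arbitrary target vector.
    target = [0.5, -0.2, 0.3]

    def toy_fitness(w: Weights) -> float:
        return -sum((a - b) ** 2 for a, b in zip(w, target))

    best, score = evolve(toy_fitness, n_weights=3)
    print(best, score)
```

Keeping the parents in the population (elitism) is a deliberate choice in this sketch: in a live, interactive setting it prevents a good behavior from disappearing just because one generation of mutations happened to be unlucky.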

We published this work at the Machine Learning for Creativity and Design workshop at NeurIPS.

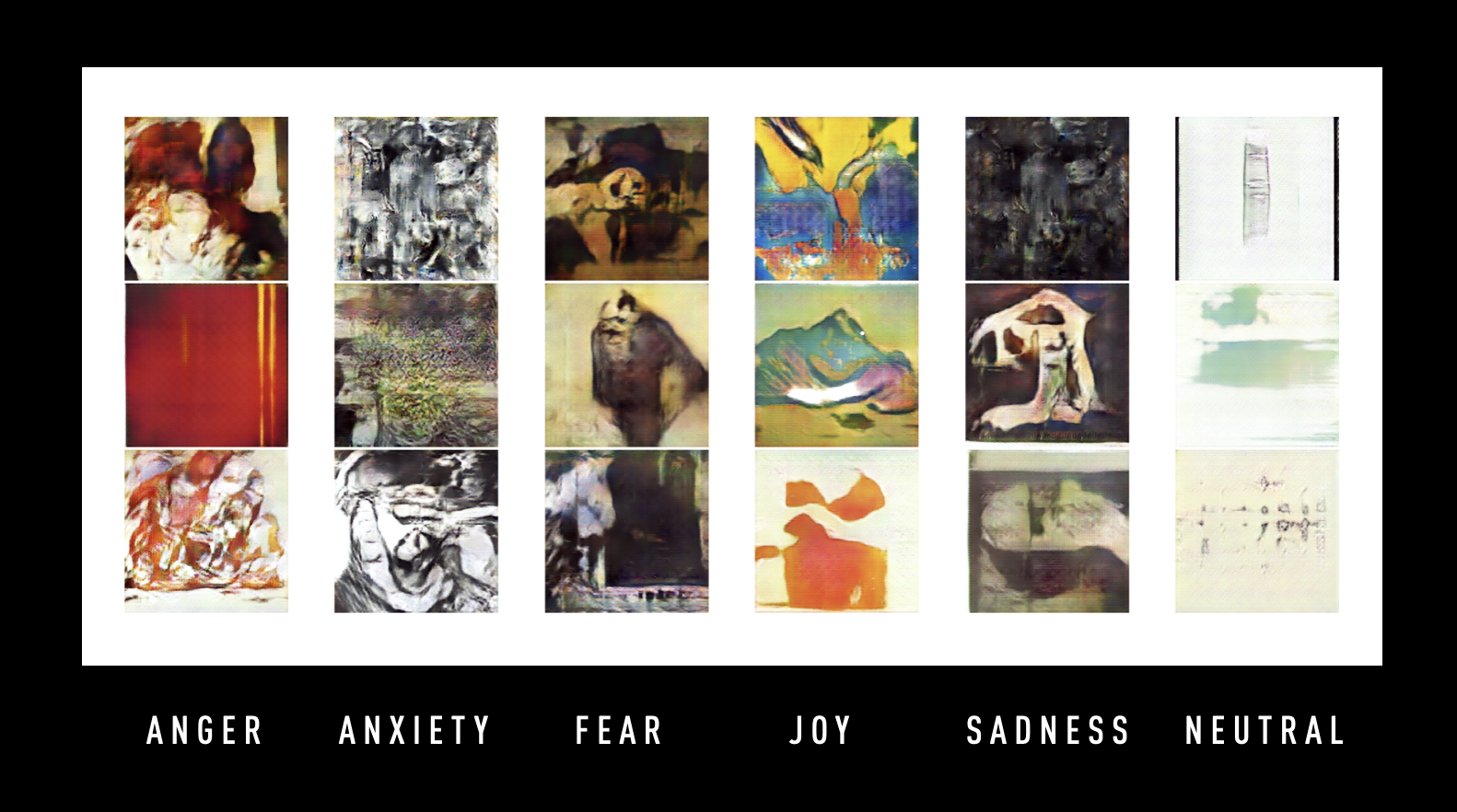

& turn emotion into art

The Emotional GAN is a project that David Alvarez and I developed in our spare time, because we love art (and needed some new decor). We created a class-conditioned GAN trained on modern artworks labeled with emotions. No such dataset existed, so we asked a group of people to label artworks from MoMA and WikiArt. Using these emotionally labeled artworks, the model can generate a completely new artistic style based on emotional cues. It was fascinating to see our method generate a variety of styles with high-level emotional features that agree with previous psychology literature: bright colors for arousal (e.g., red for anger) and dark colors for unpleasant, low-arousal states such as sadness and fear. Joy is an emotional state with medium-high arousal and positive valence; consistent with the literature, our generated joy artworks mix green-yellow and blue-green colors. In addition, we observed that many examples in the joy category resemble natural landscapes, which have also been shown to have positive psychophysiological effects.
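One common way to condition a GAN generator on a class, from the original conditional GAN formulation, is to concatenate a one-hot label to the noise vector. The sketch below shows only that input-construction step in plain Python, not the network or training loop, and it is not necessarily the exact conditioning mechanism we used. The label set is a hypothetical subset containing just the emotions named above, and `one_hot` and `generator_input` are illustrative names.

```python
import random
from typing import List, Optional, Sequence

# Hypothetical label set: only the emotions mentioned in the text above.
EMOTIONS: Sequence[str] = ("anger", "fear", "joy", "sadness")


def one_hot(label: str, classes: Sequence[str] = EMOTIONS) -> List[float]:
    """Encode an emotion label as a one-hot vector over `classes`."""
    if label not in classes:
        raise ValueError(f"unknown emotion: {label!r}")
    return [1.0 if c == label else 0.0 for c in classes]


def generator_input(label: str, noise_dim: int = 100,
                    rng: Optional[random.Random] = None) -> List[float]:
    """Build a conditional-GAN generator input: Gaussian noise z
    concatenated with the one-hot emotion label y."""
    rng = rng or random.Random()
    z = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    return z + one_hot(label)


if __name__ == "__main__":
    vec = generator_input("joy", noise_dim=4, rng=random.Random(0))
    print(len(vec), vec[-len(EMOTIONS):])
```

In the standard conditional GAN setup, the discriminator also receives the label, so the generator is pushed to produce images the discriminator accepts as belonging to that particular emotion rather than just as plausible artworks.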

We published this work at the Machine Learning for Creativity and Design workshop at NeurIPS. It was supposed to become a permanent collection in our apartment, but the resolution was too low, so it would have been better suited to postcards...

Olfactory Augmentation to Improve Memory, Sleep & Mental Health

I consider experiences that augment the sense of smell to be AR & VR experiences too. I believe in a future where we will digitize and sense odors. My Ph.D. dissertation proposed "Olfactory Interfaces": technology that uses smell as an implicit, subtle, and often less conscious output that still influences a person's cognition, paired with implicit physiological information as the system's input. As part of this work, I developed the first biometric olfactory wearable and presented a set of guidelines for designing UIs for the sense of smell that can be used during the day and during sleep. I also proposed a framework for human-computer interaction based on the user's state of consciousness (e.g., while dreaming) and the explicitness of the UI.

Augmenting Sleep and Dreams to Improve Sleep Quality & Memory

In 2016, I jump-started our group's research direction on UIs for the sleeping mind. As part of my master's thesis, I presented a pilot experiment that used olfactory and sound cues during slow-wave sleep to positively mitigate the traumatic memories of a person with PTSD. The results were promising and motivated me to miniaturize and automate the device for home use and to further explore HCI applications during my Ph.D.
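Automating cue delivery implies a control rule of the form "only cue during slow-wave sleep." The sketch below is hypothetical and is not the device's firmware: it assumes an upstream classifier already labels each 30-second epoch with a sleep stage, using the AASM convention where "N3" denotes slow-wave sleep, and it adds an illustrative minimum gap between cues so the device does not fire continuously. The function name and gap value are assumptions.

```python
from typing import List, Optional, Sequence

SLOW_WAVE_STAGE = "N3"  # AASM label for slow-wave sleep


def schedule_cues(stages: Sequence[str], min_gap_epochs: int = 2) -> List[int]:
    """Return indices of epochs in which to deliver a scent/sound cue.

    Hypothetical rule: cue only during N3, and leave at least
    `min_gap_epochs` epochs between consecutive cues.
    """
    cues: List[int] = []
    last: Optional[int] = None
    for i, stage in enumerate(stages):
        if stage != SLOW_WAVE_STAGE:
            continue  # never cue outside slow-wave sleep
        if last is None or i - last >= min_gap_epochs:
            cues.append(i)
            last = i
    return cues


if __name__ == "__main__":
    night = ["W", "N1", "N2", "N3", "N3", "N3", "N2", "N3"]
    print(schedule_cues(night))
```

Gating on the stage label rather than on time of night keeps the rule robust to individual differences in sleep architecture, at the cost of depending entirely on the accuracy of the stage classifier.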

Over the years, I have been trying to bridge the sleep research and HCI communities to explore the future of sleep interfaces. I conducted sleep studies, collaborated with sleep researchers, and started a new initiative in our group on “Dream Engineering”. I co-organized an international workshop on Dream Engineering, attended by world-leading sleep scientists, as well as a new special issue on “Dream Engineering” in the journal Consciousness and Cognition.

The work presented here has also been showcased at the 2018 Ars Electronica conference, the 2018 Beijing Media Arts Biennale at the CAFA Art Museum and exhibited at Hanshan Art Museum. More info here.

Disclosure: The U.S. Air Force has not sponsored our research. Seeker is an independent digital media network and content publisher that decided to have the U.S. Air Force sponsor this video. Believe it or not, we were not aware of this at the time of filming. However, I am aware of the ethical concerns and controversies this research can raise, and I address some of them in my dissertation.

From Vision to Execution

Here is my workflow*:

0. FIND A PROBLEM & EMPATHIZE WITH THE USER

1. BRAINSTORM (the more the merrier)

2. INVESTIGATE

3. PROTOTYPE

4. CODE

5. BUILD

6. USER TEST

7. DATA ANALYSIS (Quantitative & Qualitative)

8. DOCUMENT/PATENT

9. PUBLISH

10. PRESENT/EXHIBIT/DEMO

*I prefer to carry this out as part of a multidisciplinary group or research agenda, or while leading a small group of researchers toward the same goal.

Psst

You can check out some of my old projects here: MIT Media Lab, Vimeo, or YouTube.

Feel free to contact me; I am happy to answer any questions!